Google Sheets as a database

Using Sheets for collecting signups, dumping error logs, and database shenanigans

Github: https://github.com/janzheng/sheetlog

Demo: https://sheetlog.deno.dev/

Live Google Sheet for testing: https://docs.google.com/spreadsheets/d/15XoANPN-DAyBkQlN9-s7bCaWzDNibuWTXHFCQMISVK4/edit?gid=1483772620#gid=1483772620

Every app needs to store data.

Even the simplest notes apps and todo apps need to save their data somewhere.

For web apps, there are already plenty of ways to save data. There are browser options like Web Storage API and IndexedDB, simpler databases like Neon or Turso, and more complete solutions like MongoDB Atlas and Supabase. The browser options only work for a few use cases, and the database solutions can either be expensive or complex.

These tools make sense if you’re building a SaaS startup, but are way heavy for the “home-grown apps” movement.

Sometimes you just want to save a few rows of data. Other times you want a way for everyone to look at and edit the data. That’s where Google Sheets shines!

I build dozens and dozens of tiny homegrown apps to fit my needs, like bookmarking apps, tweet drafting tools, and event sign up websites. I really don’t want to manage a Supabase instance for each project, for what amounts to a couple of megabytes of data.

Then why use a database at all? I do go to IndexedDB as a first choice (with Dexie), but sometimes I want to collect data from others (a comment form), collect data from mobile (bookmarking and notes apps), and share the data with others (pot luck registration page). If I used Supabase, I’d have to design the data output. Why go to those lengths when Sheets already does that well?

On paper, Google Sheets looks like a great cross between a lightweight “database” and the perfect data management tool. They have a highly performant grid UI, and they have a very good collaboration and sharing system. And it’s completely free!

The problem? The official Google Sheets API is incredibly hard to work with. Even setting up the API through GCP is painful and complicated. I’ve tried that path but given up several times — until I found SpreadAPI.

SpreadAPI basically outlines how to set up a Google Apps Script as an authenticated server for any Google Sheet. The website provides the Apps Script code, plus instructions on how to deploy the script as a web application. This part is really important, as the major insight of SpreadAPI is that any Apps Scripts can essentially run as a server instance — which means that any tool can interact with it in any way!

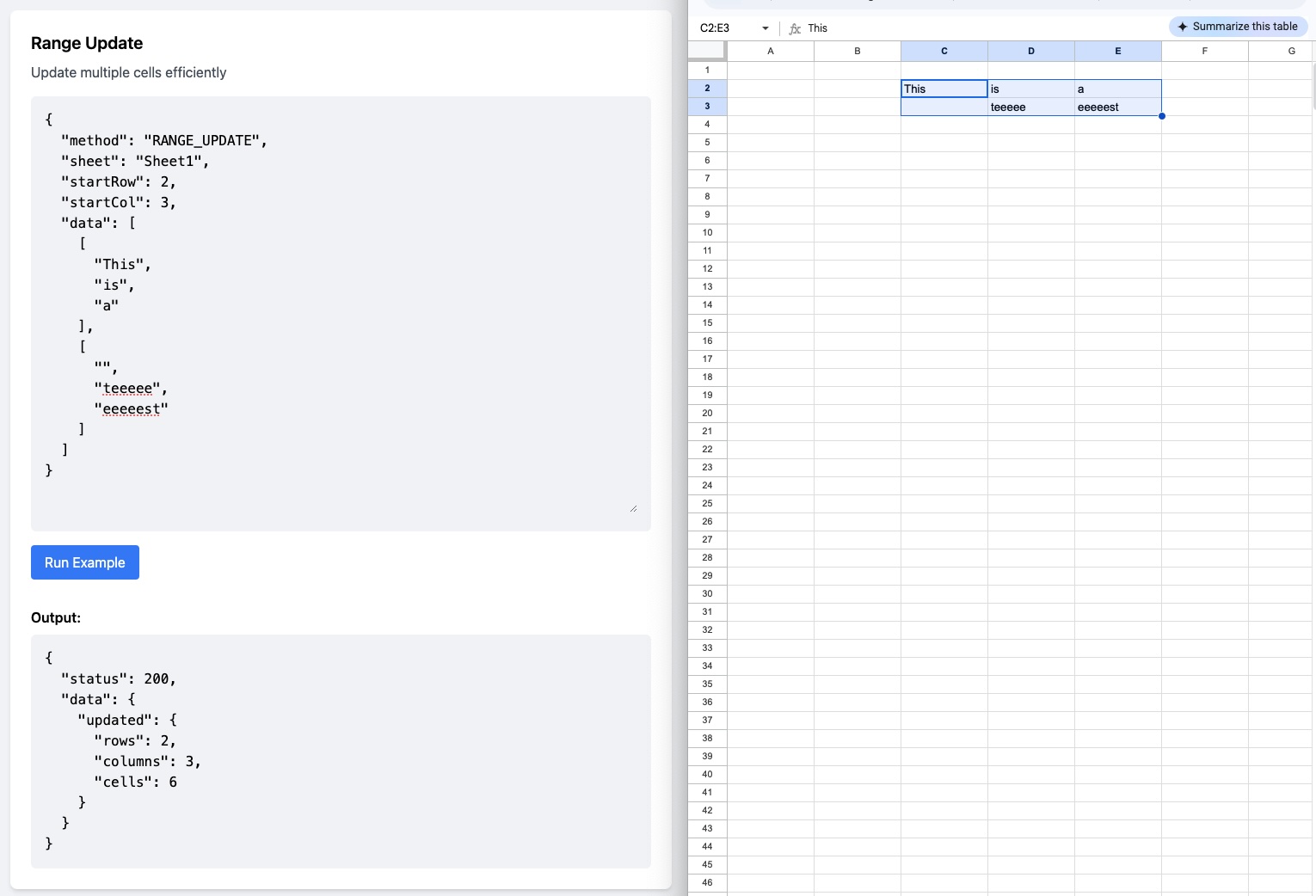

Sheetlog is a complete rewrite of SpreadAPI and adds a few more features like range updates, batch operations, CSV download, and more.

Originally when I built Sheetlog a few years ago, I intended to use it to track console.log outputs into Google Sheets as a sort of lightweight log drain / sentry.io system.

Since then the scope of the project, and it now handles full database-like CRUD functionality, Sheets-like CSV support, and column and row-based read and write operations. This means we can create multiple ranges of blocks of data in a single spreadsheet, which we can read and write from. While harder to parse and work with, this type of data is really common in finance and microbiology sheets and dashboards.

talk about cons like speed — search and access speed is atrocious, but you can cache, and you can use the CSV method by getting the tab’s CSV. Way faster than doing individual searches from Apps Script

My Bookmarks sheet is humming away at about 30,000+ links at this point. It’s been running for years, and my system parses long-form pages into markdown, which gets stored in my Google Sheet. It’s been a boon for looking up AI tools and design references I’ve found years ago. I use it every day.

You can do a ton with Google Sheets — I think they support something like 10M cells, and each cell can hold up to 50,000 characters. That’s an absolute ton of data! That’s 500MB! But I think if you push Sheets to that limit you’re probably likely going to run into a lot of performance issues.

Speaking of performance issues — Sheets starts misbehaving and acting much more slowly at around a couple of thousand rows — and operations like getRows and find can get quite slow. Honestly for large sheets, the best way to squeeze some speed out of the system would be to read the entire sheet as a CSV, cache that CSV, then parse the CSV in memory

Regardless, once you do have many thousands of rows and columns of Sheets data, you should probably consider moving your app onto a “real” data platform like MongoDB anyway.

Cheers!